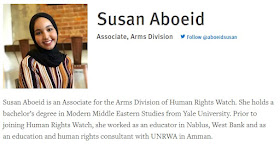

In late May, I addressed a panel on the militarization of digital spaces – specifically on how Israeli authorities use surveillance technologies to deepen systemic discrimination against Palestinians. I warned that the use of autonomy in weapons systems was a dangerous part of this trend and highlighted the urgent need for an international legal response. ... Israeli authorities are also developing autonomous weapons systems. These trends, globally reflect a slide towards digital dehumanization, and in the case of Israel this means of Palestinians. ... A senior Israeli defense official said recently that authorities are looking at “the ability of platforms to strike in swarms, or of combat systems to operate independently, of data fusion and of assistance in fast decision-making, on a scale greater than we have ever seen.” Incorporating artificial intelligence and emerging technologies into weapons systems raises a host of ethical, humanitarian, and legal concerns for all people, including Palestinians. Campaigners have called for the adoption of a treaty containing prohibitions and restrictions on autonomous weapons systems, which many countries, including Palestine, have joined. Autonomous weapons systems could help automate Israel’s uses of force. These uses of force are frequently unlawful and help entrench Israel’s apartheid against Palestinians. Without new international law to subvert the dangers this technology poses, the autonomous weapon systems Israel is developing today could contribute to their proliferation worldwide and harm the most vulnerable.

That last paragraph assumes that Israel is inherently immoral and therefore any AI-assisted weapons it develops must also likely be immoral by design.

This is the opposite of the truth.

There is no doubt that there are many potential dangers of AI. Any decision that an AI makes independently could easily be a wrong decision, and malicious actors could use AI deliberately to violate the laws of war.

In the end, though, AI is just another tool that could be used for good or bad. HRW's assumption that Israel's use of AI would only be for violating international law shows how biased the organization is.

Israel has been exploring AI in warfare for years. Its position, like that of the US, UK, Australia and others, is that AI could actually save lives in combat.

Israel's position a decade ago shows that it has given far more thought to the moral implications of this issue than HRW ever did when it launched its own shallow campaign against "killer robots." At a conference on Lethal Autonomous Weapons Systems (LAWS) in 2013, the Israeli delegate made it crystal clear that Israel is only planning to use AI as a tool where a human is the one who makes all of the important decisions:

In order to ensure the legal use of a lethal autonomous weapon system, the characteristics and capabilities of each system must be adapted to the complexity of its intended environment of use. Where deemed necessary, the warfare environment could be simplified for the system by, for example, limiting the system's operation to a specific territory, during a limited timeframe, against specific types of targets, to conduct specific kinds of tasks, or other such limitations which are all set by a human, or, for example, if necessary, it could be programmed to refrain from action, or require and wait for input from human decision-makers when the legality of a specific action is unclear. Indeed, appropriate human judgment is injected throughout the various phases of development, testing, review, approval, and decision to employ a weapon system. The end goal would be for the system's capabilities to be adapted to the operational complexities that it is expected to encounter, in a manner ensuring compliance with the Laws of Armed Conflict. In this regard, LAWS are not different from many other weapon systems which do exist today, including weapons whose legal use is already regulated under the CCW.

The Israeli official quoted by HRW above says the same thing: the systems are meant to assist in decision-making, not to make decisions themselves.

Utterly absent in HRW's one-sided, biased screed is the potential that AI can save people's lives and enhance compliance with the laws of armed conflict:

In our view, there is even a good reason to believe that LAWS might ensure better compliance with the Laws of Armed Conflict in comparison to human soldiers. In many ways, LAWS could be more predictable than humans on the battlefield. They are not influenced by feelings of pressure, fear or vengeance, and may be capable of processing information more quickly and precisely than a person. Experience shows that whenever sophisticated and precise weapons have been employed on the battlefield, they have led to increased protection of both civilians and military forces. Thus, Lethal Autonomous Weapon Systems may serve to uphold in an improved manner, the ideals of both military necessity and humanitarian concern - the two pillars upon which the Laws of Armed Conflict rest.

Israel's history of incorporating human rights law and civilian protection into its development of weapons is unparalleled, with the possible exception of the US - and therefore not something that HRW wants anyone to know about.

Self-driving cars, while not perfect, have been shown to be much less likely to be involved in fatal accidents than humans. There is no reason to assume that weapons systems would be any different. It is clear that Israel's position is to develop such systems that will minimize the dangers to innocent people, all with appropriate oversight by human experts. There are still some areas that need to be worked out, like who is responsible for mistakes made by such systems, but these issues all mirror similar issues in complex wartime environments today.

Israel intends to use AI to minimize collateral damage, save lives and adhere to the laws of armed conflict better than humans can alone. These are positions that real human rights advocates should fully support. But because it is Israel, they instead assume that whatever the Jews invent must be to oppress Palestinian victims and they reflexively oppose it.

Which means that Human Rights Watch cares less about Palestinian lives than Israel does.

UPDATE: I coincidentally just came across an article by Marc Andreessen, the legendary computer expert most responsible for creating the first popular web browsers, Mosaic and Netscape, on his optimistic predictions for AI. He writes:

I even think AI is going to improve warfare, when it has to happen, by reducing wartime death rates dramatically. Every war is characterized by terrible decisions made under intense pressure and with sharply limited information by very limited human leaders. Now, military commanders and political leaders will have AI advisors that will help them make much better strategic and tactical decisions, minimizing risk, error, and unnecessary bloodshed.

No surprise that Israel and the experts are on the same page, while HRW tries to scare everyone by referring to any smart weapons system as "killer robots."
